Are You Taking Lightweighting Too Far?

“Lightweighting” is a hot topic in mechanical design. In simple terms, it means using CAD/CAE tools such as finite element analysis (FEA) to model and simulate a design, then selectively removing or thinning material, rerunning the simulation, and seeing whether the design is still viable. The goal is to minimize material, thus reducing size and weight while (hopefully) simplifying production and reducing raw-material cost.

More colloquially, we cut things as close as we can and still get away with it. In principle, it’s a great idea; after all, reducing weight and cost are priorities in almost every project.

In the “bad old days” before we had these powerful simulation and modeling tools, designers would leave in or even add a little extra for a safety margin, “just in case.” They used a combination of analysis, judgment, and experience to decide where that extra might be needed. Now, however, there’s pressure to minimize that margin, since the tools say all will be OK.

But will it be OK? Every engineer knows that any simulation is only as good as the model, and every model has embedded assumptions and simplifications. This was all made very clear by a recent article by Tony Abbey, an FEA expert and instructor, in Digital Engineering, “FEA Demos and Benchmarks.”

He gives specific examples where some standard arrangements widely used in many FEA configurations simplify “little things” to ease their construction and analysis – yet doing so will have a major impact on the validity of the analysis.

Of course, it’s not just mechanical design that is prone to this weakness: electronic designs have the same issues, though the results are usually less dramatic than a failed joint, bracket, or member. Even if the model somehow faithfully includes the parasitics, how do you assign values to them? Do these values change over time and temperature? What’s their deviation from nominal due to normal materials and manufacturing variations? Even with a properly designed Monte Carlo simulation of the design, it’s nearly impossible to run it across all possible variations.
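
As a minimal sketch of that limitation, the Python below runs a Monte Carlo tolerance analysis on a hypothetical two-resistor voltage divider. The 10 kΩ nominal values, the ±1% uniform tolerance, and the function names are illustrative assumptions, not taken from any real design. It compares the sampled spread against the analytic worst-case corners.

```python
import random


def divider_ratio(r_top, r_bottom):
    """Ideal resistive divider ratio Vout/Vin."""
    return r_bottom / (r_top + r_bottom)


def monte_carlo_divider(n_trials=10_000, r_nom=10_000.0, tol=0.01, seed=1):
    """Sample the divider ratio with each resistor drawn uniformly within +/-tol.

    r_nom and tol are hypothetical values chosen only for illustration.
    Returns the smallest and largest ratio seen across all trials.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    ratios = []
    for _ in range(n_trials):
        r_top = r_nom * (1 + rng.uniform(-tol, tol))
        r_bottom = r_nom * (1 + rng.uniform(-tol, tol))
        ratios.append(divider_ratio(r_top, r_bottom))
    return min(ratios), max(ratios)


lo, hi = monte_carlo_divider()

# Analytic worst-case corners: one resistor at +1%, the other at -1%.
worst_lo = divider_ratio(10_000.0 * 1.01, 10_000.0 * 0.99)
worst_hi = divider_ratio(10_000.0 * 0.99, 10_000.0 * 1.01)

print(f"sampled ratio range:    {lo:.5f} .. {hi:.5f}")
print(f"worst-case ratio range: {worst_lo:.5f} .. {worst_hi:.5f}")
```

The sampled extremes can approach the worst-case corners but cannot go beyond them, and the run says nothing about effects the model leaves out, such as temperature drift or aging.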

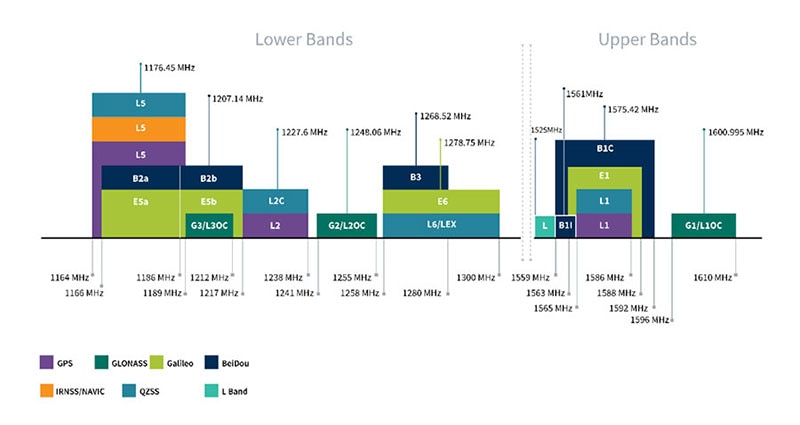

Figure 1: The inevitable dissipation of a resistor will cause an increase in its temperature, which causes its actual resistance to shift from the nominal value; the graph shows the rapid increase in temperature as the resistor dissipation approaches its rated power level. (Image source: TT Electronics)

Model validity is not only an RF issue; it’s a DC one as well. Consider the ubiquitous current-sense resistor, which is usually in the milliohm range. In principle, this can be realized by using a short piece of copper wire. While that will work, it won’t work well for very long due to self-heating and the resultant change in the copper’s resistance. Basic copper has a temperature coefficient of resistance (TCR) of about 4000 ppm/°C, so a 1 milliohm (mΩ) resistor undergoing a modest 50°C rise will soon be a 1.2 mΩ unit – a 20% shift (Figure 1).
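
The arithmetic behind that 20% figure can be sketched in a few lines of Python. This assumes a simple linear TCR model and uses the approximate copper value quoted above; the 10 A load current and the function name are hypothetical, added only to show how the shift turns into a measurement error.

```python
def resistance_after_rise(r_nominal_ohm, tcr_ppm_per_c, delta_t_c):
    """Linear TCR model: R = R0 * (1 + TCR * dT), with TCR given in ppm/°C."""
    return r_nominal_ohm * (1.0 + tcr_ppm_per_c * 1e-6 * delta_t_c)


R_NOMINAL = 1e-3         # 1 mΩ sense resistor, from the text
COPPER_TCR_PPM = 4000.0  # approximate TCR of copper, from the text
DELTA_T = 50.0           # temperature rise in °C, from the text

r_hot = resistance_after_rise(R_NOMINAL, COPPER_TCR_PPM, DELTA_T)
shift_pct = (r_hot / R_NOMINAL - 1.0) * 100.0

# Hypothetical load: the readout still assumes the nominal 1 mΩ value,
# so the hot resistor makes the inferred current read high.
true_current_a = 10.0
sense_voltage_v = true_current_a * r_hot
inferred_current_a = sense_voltage_v / R_NOMINAL

print(f"hot resistance: {r_hot * 1e3:.2f} mOhm ({shift_pct:.0f}% shift)")
print(f"inferred current: {inferred_current_a:.1f} A for a true {true_current_a:.1f} A")
```

An electrical-only simulation that treats the resistor as a fixed 1 mΩ would never show this error.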

A design that is validated by looking at electronic performance only, without factoring in thermal effects, will be marginal at best. While there are multi-physics tools such as COMSOL that allow for linking and cross-modeling electronic, magnetic, thermal, and mechanical analysis, they still require insight into these correlations.

That’s why vendors of specialty components such as Vishay Dale offer special low-TCR resistors, based on complex materials and processes. For example, their WSBS8518 series of power metal strip resistors has a TCR between ±110 and ±200 ppm/°C (depending on nominal resistance value), while their WSLP series is even better, with a TCR as low as ±75 ppm/°C. There are also highly specialized resistors with single-digit TCRs from other sources, intended for high-end metrology applications.
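
One way to see what those TCR numbers buy is to ask how much temperature rise each part can tolerate before its resistance drifts past a given error budget. The sketch below assumes a linear TCR model and a hypothetical 1% budget; the TCR values are the ones quoted in the text, and the dictionary labels and function name are illustrative.

```python
def max_rise_for_budget(error_budget_pct, tcr_ppm_per_c):
    """Largest temperature rise (°C) that keeps the resistance shift within
    the budget, assuming the linear model dR/R = TCR * dT."""
    return (error_budget_pct / 100.0) / (tcr_ppm_per_c * 1e-6)


# TCR magnitudes in ppm/°C as quoted in the text.
TCR_PPM = {
    "plain copper (approx.)": 4000.0,
    "WSBS8518, upper figure": 200.0,
    "WSBS8518, lower figure": 110.0,
    "WSLP, best case": 75.0,
}

ERROR_BUDGET_PCT = 1.0  # hypothetical accuracy budget for the sense resistor

allowed_rise = {
    name: max_rise_for_budget(ERROR_BUDGET_PCT, tcr)
    for name, tcr in TCR_PPM.items()
}

for name, rise in allowed_rise.items():
    print(f"{name:24s} {rise:7.1f} °C allowed rise")
```

Under these assumptions, copper exhausts a 1% budget after only a few degrees of self-heating, while the low-TCR parts tolerate tens of degrees or more.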

A little designer humility is generally a good thing. Unless you really, truly know that your modeling is good enough, add a little extra margin in your design. If the dissipation calculation says you need a 0.22 watt resistor, maybe you should go for a ½ watt unit rather than a just-makes-it ¼ watt one. Designers in those pre- and early-modeling days knew they didn’t know everything, so they routinely added substantial margins for performance and safety rather than going for extreme lightweighting.
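
That choice can be written down as a simple derating rule. The sketch below picks the smallest standard power rating that keeps the calculated dissipation under a chosen fraction of the rating. The list of ratings, the 50% utilization limit, and the function name are assumptions for illustration, not a universal standard.

```python
# Hypothetical list of commonly stocked resistor power ratings, in watts.
STANDARD_RATINGS_W = [0.125, 0.25, 0.5, 1.0, 2.0]


def pick_rating(dissipation_w, max_utilization=0.5, ratings=STANDARD_RATINGS_W):
    """Return the smallest rating for which the dissipation stays at or below
    max_utilization * rating.

    max_utilization=1.0 means no margin at all; 0.5 is an assumed rule of thumb.
    """
    for rating in sorted(ratings):
        if dissipation_w <= rating * max_utilization:
            return rating
    raise ValueError("no listed rating is large enough for this dissipation")


calculated_w = 0.22  # dissipation from the example in the text

no_margin = pick_rating(calculated_w, max_utilization=1.0)
with_margin = pick_rating(calculated_w, max_utilization=0.5)

print(f"no margin:   {no_margin} W part, running at {calculated_w / no_margin:.0%} of rating")
print(f"with margin: {with_margin} W part, running at {calculated_w / with_margin:.0%} of rating")
```

With no margin the rule lands on the ¼ watt part running at most of its rating; with the assumed 50% limit it moves up to the ½ watt part.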

Today’s powerful tools and models can easily lead to designer’s hubris: thinking and acting as if we know a lot more than we do. Realistically assessing your level of confidence in the model and simulation, then factoring that into the design and BOM, can be a very smart move.
